Yesterday, the US Supreme Court ruled in favor of internet platforms in two similar cases. Gonzalez v. Google alleged that YouTube’s algorithms promoted ISIS recruitment videos and that this contributed to the 2015 terrorist attack in Paris. The family of one of the victims argued that, because YouTube sold advertising on these videos, Google had supported ISIS. In Twitter v. Taamneh, the family of a victim of an ISIS attack in Turkey sued Twitter, claiming the company should have done more to detect and remove ISIS content from its platform. In both cases, the Supreme Court did not find the internet platforms liable for the user content posted to them.

What is Section 230?

Section 230 of the Communications Decency Act says internet platforms (think Facebook, Twitter, YouTube) hosting user content are not liable for users’ posts. For example, if a restaurant reviewer posted something defamatory about a new restaurant on Twitter, the restaurant could sue the reviewer for libel, but it couldn’t sue Twitter. Broadly, Section 230 means that internet platforms cannot be sued as the publisher of content their users post.

At the same time, Section 230 allows internet platforms to moderate content according to their community standards. They can decide what content is acceptable or unacceptable and whether to host, moderate, or restrict access to it.

Section 230 was created, in part, to protect free speech online. If internet companies were legally responsible for everything users posted, they would be less likely to host user content at all. The law’s protections have some exceptions, such as laws that combat sex trafficking or protect the privacy of electronic communications.

Section 230 was enacted in 1996, when internet-based companies and newly created websites were struggling to grow. Offering a legal shield against user-posted content helped these young tech companies develop into websites with millions or even billions of users. Today, monitoring and approving every piece of content users post is impossible. If internet platforms faced legal liability for user-made content, they could not exist in their current form.

Because of their size, social media platforms shape online communication in a way that didn’t exist when the Communications Decency Act was passed. User posts may contain lies, hate speech, or propaganda, which can breed polarization and harm society. Since the platforms have become too big for the companies behind them to monitor each piece of user-generated content, how can we, as a society, use our agency to limit the spread of harmful content while still respecting free speech in the digital space? How can we build the kind of digital world we want, one that connects us rather than divides us? Search for Common Ground has a place to start.

Search for Common Ground Pioneering Digital Peacebuilding

Over 1.8 billion social media users, about 40% of everyone using tech platforms, participate in Facebook groups monthly. Platforms operating in the mobile tech space, such as Signal and WhatsApp, have a global user base of over 5.34 billion unique users, most of whom participate in groups. In private groups or private channels, users may encounter harmful content, such as hate speech, marginalization, recruitment into extremist groups, or incitement to violence. In response, some groups block users, and some members leave the group themselves, but harmful content still proliferates. When this content spills offline into the real world, especially in places experiencing polarization or conflict, it can lead to violence.

Conversely, private groups and channels also offer some of the most effective responses to digital harm. Private groups and channels are managed by administrators or moderators, who have authority and influence over their members. They are in charge of reviewing user-generated content to ensure that content follows the rules and community standards of the social media platform to which it is posted. (Content moderation was a key point in yesterday’s Supreme Court rulings.) On Facebook alone, there are over 70 million group administrators.

Administrators and moderators act as community stewards who are tasked with promoting healthy relationships in online groups. Many administrators and moderators admit they took on the role without fully understanding the scope of their responsibilities. Search for Common Ground recognized that group administrators and moderators have the potential to use tech to foster positive online spaces. In response, Search created and launched a free curriculum that trains administrators and moderators to foster healthy relationships in online community spaces.

Digital Community Stewards

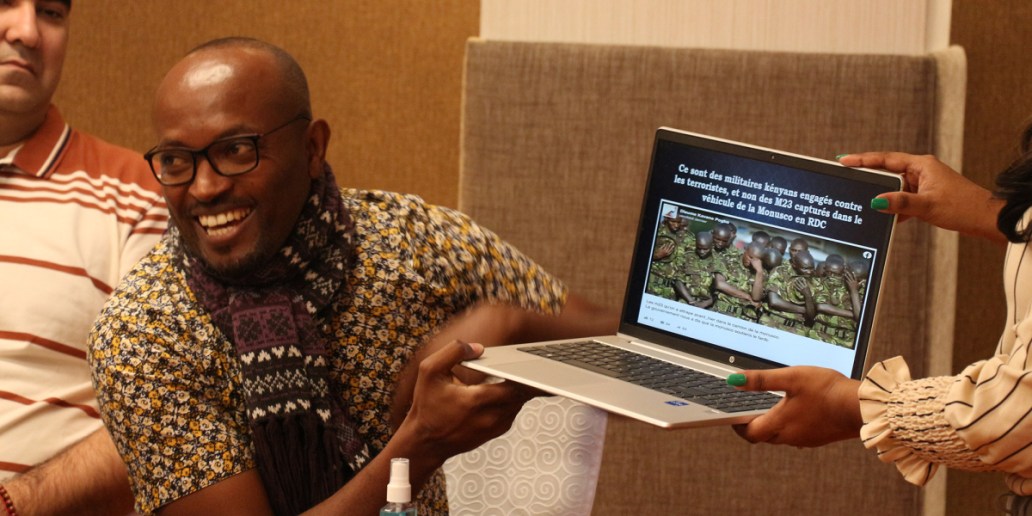

Developed by Search for Common Ground and hosted on the social impact network ConnexUs, the digital community stewards training curriculum includes ten modules to prepare administrators and moderators for the challenges they will encounter as they nurture healthier relationships in their online groups. The training covers topics such as how to cultivate trust and connection in group members’ interactions, how to deal with group members who promote misinformation and disinformation, how to fact-check or verify content, and how to keep members safe from harm while respecting freedom of expression.

Before the community stewards training curriculum was launched publicly on ConnexUs, it was piloted with administrators and moderators of both public and private groups on platforms such as Facebook, Instagram, WhatsApp, Twitch, Snapchat, Signal, and Telegram. Participants spanned the globe, coming from 13 countries: Cameroon, Egypt, Indonesia, Iraq, Jordan, Kenya, Kyrgyzstan, Lebanon, Morocco, Nepal, Nigeria, Sierra Leone, and Sri Lanka. These pilot stewards managed large online groups of more than 1,000 people, and they usually managed more than one group or moderated the same group across multiple platforms. They included activists, leaders of civil society organizations, peacebuilders, social workers, and journalists working on issues ranging from women’s economic empowerment to youth and tech to social justice.

What Did We Learn from the First Community Stewards Training Program?

- Community stewards realized the importance of their influence over the groups they manage.

“When you lead online communities, you will soon understand that it will not remain restricted to certain geographic locations and socioeconomic categories. Online, everyone can join a digital community—not just the people from our country.” – a community steward participant

- The training helped some community stewards realize their full potential.

“I didn’t realize the actual amount of effort and commitment and work that you personally (or I personally) need to put into it to make sure that it’s sustainable or to make sure that you know objectives are achieved. So I think for me, that’s how the perspective has changed about understanding and acknowledging the [stewardship] role and the…power that we have to actually shift perceptions, behaviors, and attitudes, in…the world.” – a community steward participant

- Community stewards believe they can foster social cohesion, but need more tools.

“Probably the [social media] service providers, including Meta and others, should look deeply into what support they are willing to give so that digital community stewards can make use of the platform better…For instance, when you open a group, there could be a space you enter that teaches you how to manage a group, what triggers to look out for hate speech, misinformation, [and] disinformation, so they can learn how to spot fake news, to make things a little easier for moderators and admins.” – a community steward participant

- Community stewards are motivated when their online engagement results in positive offline engagement.

“And what motivates me [is] when boys and girls come to us and thank us for providing a safe space. They post, ‘Maybe you read this, but I am following you, and it is changing my life because of what you are doing,’ and when I read these posts, this is what motivates me. And when we receive these messages, I share it with the volunteers, and it motivates them as well.” – a community steward participant

- Community stewards discovered that online to offline engagement scales impact.

“Because we are helping teenagers, they are talking to their parents, changing their parents’ mindset, and those parents have become our allies.” – a community steward participant

Yesterday’s Supreme Court decisions underscore how important it is that we take responsibility for the online communities we create. When online hate or disinformation leads to real-world violence, it reverses years of development gains, making more people dependent on humanitarian aid to survive. The interdependence of development gains and peacebuilding creates a virtuous cycle, as dynamic as it is vulnerable. Search for Common Ground is pioneering peacebuilding in the digital space so that real-world peace and development can thrive.